Azure Blob Storage — Highly Scalable, Secure & Supports Lifecycle Management

Short URL: cloudknowledge.in/azure-blob-storage-guide

Quick summary: Azure Blob Storage is Microsoft’s massively scalable object storage for unstructured data with tiering, lifecycle management, enterprise security (encryption, RBAC, private endpoints), ADLS Gen2 capabilities, monitoring and integrations with analytics platforms like Synapse and Databricks. (Official docs: Microsoft Azure Blob Storage).

Overview: What is Azure Blob Storage?

Azure Blob Storage is Microsoft's object storage solution designed for storing massive amounts of unstructured data — images, logs, backups, media, big-data files and more. It provides multiple tiers, built-in lifecycle management, strong encryption, redundancy options, and integrates with analytics and compute services for enterprise workloads.

- Optimized for unstructured data at cloud scale.

- Multiple access tiers (Hot, Cool, Archive) for cost vs performance.

- Data durability and redundancy options for business continuity.

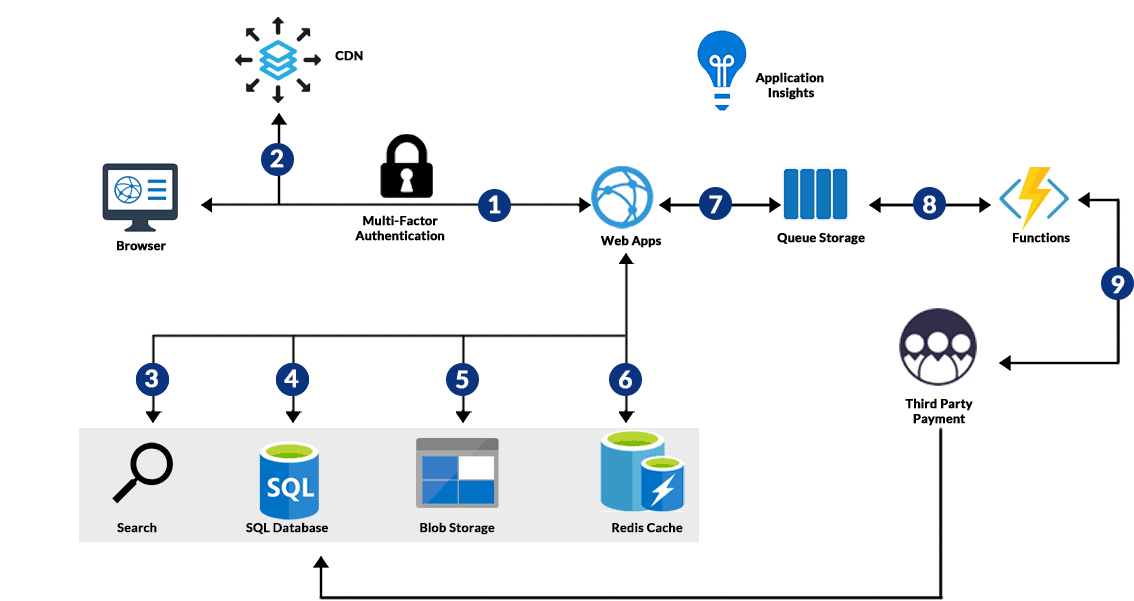

- Seamless integration with analytics (Synapse, Databricks) and serverless (Functions, Event Grid).

1. Massively Scalable Object Storage

Azure Blob Storage scales to petabytes and beyond with parallel upload/download patterns, chunking, and high-throughput APIs suitable for HPC, ML, IoT and large media workloads. Because the underlying platform distributes objects over many storage nodes, you get high availability and throughput at scale.

Keypoints

- Scale from gigabytes to petabytes without rearchitecting.

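- Parallel and chunked uploads make large transfers reliable; with current service versions a single block blob can hold up to about 190.7 TiB (50,000 blocks of up to 4,000 MiB each).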

- Supports massive concurrency for analytics and streaming workloads.
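Chunked uploads to a block blob work in two steps. Blocks are staged with Put Block calls, and then their ordered IDs are committed with Put Block List. Below is a minimal Python sketch of the planning step only. It assumes the current limits of 4,000 MiB per block and 50,000 blocks per blob. `plan_blocks` is a hypothetical helper. The Azure SDKs do this splitting and the parallel uploads for you.

```python
import base64
import math

# Limits for service version 2019-12-12 and later (older versions allow smaller blocks).
MAX_BLOCK_SIZE = 4000 * 1024 * 1024  # 4,000 MiB per block
MAX_BLOCKS = 50_000                  # committed blocks per block blob


def plan_blocks(total_size: int, block_size: int = 8 * 1024 * 1024) -> list:
    """Split an upload into (block_id, offset, length) tuples, one per Put Block call.

    Committing the ordered list of block IDs with Put Block List assembles the blob.
    """
    if not 0 < block_size <= MAX_BLOCK_SIZE:
        raise ValueError("block_size must be between 1 byte and 4,000 MiB")
    count = math.ceil(total_size / block_size)
    if count > MAX_BLOCKS:
        raise ValueError(f"{count} blocks needed; use a larger block_size")
    plan = []
    for i in range(count):
        offset = i * block_size
        length = min(block_size, total_size - offset)
        # Block IDs must be base64 strings of equal length within a blob,
        # so a zero-padded counter is encoded for every block.
        block_id = base64.b64encode(f"block-{i:06d}".encode()).decode()
        plan.append((block_id, offset, length))
    return plan
```

For a 20 MiB file with the default 8 MiB block size, `plan_blocks` returns three entries of 8, 8 and 4 MiB. Because each block is independent, the blocks can be uploaded in parallel and retried individually.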

2. Stores Any Type of Unstructured Data

Use Azure Blob Storage for images, videos, logs, backups, VM disks (VHDs via Page Blobs), application binaries, IoT telemetry, and large datasets for analytics.

Keypoints

- Flexible object model: store binary or text data with metadata and tags.

- Great fit for backups, media streaming, big-data landing zones, and web assets.

3. Three Blob Types for Flexibility

Azure supports three primary blob types to match common patterns:

- Block Blobs — Optimized for streaming and large uploads; best for most general objects (images, video, documents).

- Append Blobs — Optimized for append-only scenarios like logging where data is continuously appended.

- Page Blobs — Random read/write access; used for disks/VHDs and scenarios needing low-latency random operations.

Keypoints

- Choose the blob type based on read/write pattern and performance needs.

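- Append blobs only allow new blocks to be added at the end. Existing blocks cannot be updated or deleted, so append blobs don't suit data that is frequently overwritten.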
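Page blobs are addressed in 512-byte pages, which is what makes random reads and writes possible. The following Python sketch shows the alignment rules a writer has to respect. It assumes the documented 512-byte page size and the 4 MiB maximum per Put Page request. The function names are hypothetical, and no request is sent.

```python
PAGE_SIZE = 512                 # page blobs are made of 512-byte pages
MAX_PUT_PAGE = 4 * 1024 * 1024  # largest range one Put Page request may write


def validate_page_write(offset: int, length: int) -> None:
    """Raise ValueError if a page write would be rejected for alignment or size."""
    if offset < 0 or length <= 0:
        raise ValueError("offset must be >= 0 and length must be > 0")
    if offset % PAGE_SIZE or length % PAGE_SIZE:
        raise ValueError("offset and length must be multiples of 512 bytes")
    if length > MAX_PUT_PAGE:
        raise ValueError("a single page write is limited to 4 MiB")


def page_range_header(offset: int, length: int) -> str:
    """Build the inclusive byte range for a page write, e.g. 'bytes=0-511'."""
    validate_page_write(offset, length)
    return f"bytes={offset}-{offset + length - 1}"
```

Block blobs and append blobs have no such alignment requirement. That is one reason page blobs are reserved for disk-like workloads.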

4. Redundancy Options (LRS, ZRS, GRS, RA-GRS)

Azure offers multiple redundancy and replication configurations to meet durability and availability requirements:

- LRS (Locally-Redundant Storage): keeps three synchronous copies within a single datacenter in the primary region.

- ZRS (Zone-Redundant Storage): replicates synchronously across three availability zones in the primary region for regional high availability.

- GRS (Geo-Redundant Storage): LRS in the primary region plus asynchronous replication to a secondary (paired) region.

- RA-GRS (Read-Access Geo-Redundant Storage): GRS with read access to the secondary region.
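The options differ in two ways: how many copies exist in each location, and whether the secondary copy can be read. This Python sketch encodes those differences as a lookup table, using the copy counts described in the Azure Storage redundancy documentation. It then derives the read endpoints, assuming the standard `<account>-secondary` naming for the RA-GRS secondary endpoint. `OPTIONS` and `read_endpoints` are illustrative names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Redundancy:
    primary_copies: int      # synchronous copies in the primary region
    secondary_copies: int    # asynchronous copies in the secondary region
    secondary_readable: bool


OPTIONS = {
    "LRS": Redundancy(3, 0, False),    # three copies in one datacenter
    "ZRS": Redundancy(3, 0, False),    # three copies across availability zones
    "GRS": Redundancy(3, 3, False),    # LRS in primary + LRS in secondary
    "RA-GRS": Redundancy(3, 3, True),  # GRS plus read access to the secondary
}


def read_endpoints(account: str, option: str) -> list:
    """Return the Blob endpoints a client can read from for a redundancy option."""
    endpoints = [f"https://{account}.blob.core.windows.net"]
    if OPTIONS[option].secondary_readable:
        # The secondary endpoint appends "-secondary" to the account name.
        endpoints.append(f"https://{account}-secondary.blob.core.windows.net")
    return endpoints
```

Geo-replication is asynchronous, so reads from the secondary endpoint can lag behind the primary. The account's Last Sync Time shows how far behind the secondary is.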

5. Built-in Lifecycle Management Policies & Intelligent Tiering

Azure Blob Storage provides a policy engine that automatically moves blobs between the Hot, Cool and Archive tiers and deletes or expires data. Rules filter blobs by name prefix or blob index tags, and they act on conditions such as days since creation, last modification or last access. This reduces cost and operational overhead.

Lifecycle management capabilities

- Transition blobs to Cool or Archive after N days since last modification.

- Auto-delete older snapshots or previous versions after a retention period.

- Policies are JSON documents applied to the storage account and evaluated automatically.

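Example: JSON lifecycle rule (move blobs under the logs/ prefix to Cool after 30 days)

```json
{
  "rules": [
    {
      "name": "move-logs-to-cool",
      "enabled": true,
      "type": "Lifecycle",
      "definition": {
        "filters": {
          "blobTypes": ["blockBlob"],
          "prefixMatch": ["logs/"]
        },
        "actions": {
          "baseBlob": {
            "tierToCool": { "daysAfterModificationGreaterThan": 30 }
          }
        }
      }
    }
  ]
}
```

A prefixMatch value starts with the container name, so "logs/" matches every blob in a container named logs. The blobTypes filter is required.

PowerShell example: set the lifecycle policy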

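```powershell
# Build the same rule with the Az.Storage policy cmdlets and apply it to the account
Connect-AzAccount
$rg = "myResourceGroup"
$sa = "mystorageaccount"

$action = Add-AzStorageAccountManagementPolicyAction -BaseBlobAction TierToCool -DaysAfterModificationGreaterThan 30
$filter = New-AzStorageAccountManagementPolicyFilter -PrefixMatch "logs/" -BlobType blockBlob
$rule   = New-AzStorageAccountManagementPolicyRule -Name "move-logs-to-cool" -Action $action -Filter $filter
Set-AzStorageAccountManagementPolicy -ResourceGroupName $rg -StorageAccountName $sa -Rule $rule
```

To apply a saved policy.json file as-is, use the Azure CLI: `az storage account management-policy create --account-name mystorageaccount --resource-group myResourceGroup --policy @policy.json`.

Documentation and examples for lifecycle policies and monitoring are available on Microsoft Docs.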

Keypoints

- Use lifecycle rules to lower storage costs for infrequently accessed data.

- Policies apply at storage-account scope. Tier-change actions apply to block blobs only; delete actions apply to both block and append blobs.

- Monitor policy actions via metrics and diagnostic logs.
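You can sketch the evaluation logic locally to check what a rule will do before you deploy it. This Python sketch covers only the `blobTypes` and `prefixMatch` filters and the `daysAfterModificationGreaterThan` condition from the example rule. The real policy engine runs inside the service, typically once a day, and supports more conditions. `due_actions` is a hypothetical helper. Its `blob_path` argument is assumed to start with the container name, as prefixMatch values do.

```python
from datetime import datetime, timedelta, timezone

# The move-logs-to-cool rule from the JSON example, as a Python dict.
POLICY = {
    "rules": [{
        "name": "move-logs-to-cool",
        "enabled": True,
        "type": "Lifecycle",
        "definition": {
            "filters": {"blobTypes": ["blockBlob"], "prefixMatch": ["logs/"]},
            "actions": {"baseBlob": {"tierToCool": {"daysAfterModificationGreaterThan": 30}}},
        },
    }]
}


def due_actions(policy: dict, blob_path: str, blob_type: str,
                last_modified: datetime, now: datetime) -> list:
    """Return (rule name, action) pairs whose filters and age condition this blob meets.

    blob_path is "<container>/<blob name>", matching how prefixMatch is written.
    """
    age_days = (now - last_modified) / timedelta(days=1)
    due = []
    for rule in policy["rules"]:
        if not rule.get("enabled", True):
            continue
        filters = rule["definition"]["filters"]
        if blob_type not in filters["blobTypes"]:
            continue
        prefixes = filters.get("prefixMatch", [])
        if prefixes and not any(blob_path.startswith(p) for p in prefixes):
            continue
        for action, condition in rule["definition"]["actions"].get("baseBlob", {}).items():
            threshold = condition.get("daysAfterModificationGreaterThan")
            if threshold is not None and age_days > threshold:
                due.append((rule["name"], action))
    return due


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    # A 45-day-old log blob is due for the Cool tier.
    print(due_actions(POLICY, "logs/app/2024-01-01.log", "blockBlob", now - timedelta(days=45), now))
```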

6. Supports Data Lake Capabilities (ADLS Gen2)

When you enable hierarchical namespace on a general-purpose v2 storage account, it becomes ADLS Gen2, combining blob storage scale with file system semantics, ACLs and optimized performance for big data analytics. This is ideal for enterprise data lakes and ETL/analytics workloads.

Keypoints

- Hierarchical namespace — directories and atomic directory operations.

- POSIX-like ACLs for fine-grained access control on files & folders.

- Integrates with Azure Databricks, Synapse, HDInsight and Power Query.
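ADLS Gen2 writes ACLs in the POSIX short form, for example `user::rwx,group::r-x,other::---`. Optional `default:` entries on a directory are inherited by new children. This minimal Python sketch uses hypothetical helpers, not an Azure SDK API. It parses such a string and derives the familiar octal mode for the owning user, owning group and others. It ignores named entries and the mask, which also affect effective permissions.

```python
def parse_acl(acl: str) -> list:
    """Split an ACL string into (is_default, type, qualifier, perms) entries."""
    entries = []
    for raw in acl.split(","):
        parts = raw.strip().split(":")
        is_default = parts[0] == "default"
        if is_default:
            parts = parts[1:]
        if len(parts) != 3:
            raise ValueError(f"malformed ACL entry: {raw!r}")
        kind, qualifier, perms = parts
        entries.append((is_default, kind, qualifier, perms))
    return entries


def perms_to_octal(perms: str) -> int:
    """Convert 'r-x' style permissions to one octal digit (r=4, w=2, x=1)."""
    if len(perms) != 3:
        raise ValueError(f"expected 3 permission characters, got {perms!r}")
    value = 0
    for ch, letter, weight in zip(perms, "rwx", (4, 2, 1)):
        if ch == letter:
            value += weight
        elif ch != "-":
            raise ValueError(f"unexpected permission character {ch!r}")
    return value


def owner_group_other_mode(acl: str) -> str:
    """Octal mode for owning user, owning group and other (named entries and mask ignored)."""
    access = {(kind, qual): perms
              for is_default, kind, qual, perms in parse_acl(acl) if not is_default}
    return "".join(str(perms_to_octal(access[(kind, "")])) for kind in ("user", "group", "other"))
```

For `user::rwx,group::r-x,other::---`, `owner_group_other_mode` returns `"750"`.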

See the Microsoft documentation for step-by-step guidance on enabling the hierarchical namespace.

7. Highly Secure: Encryption, RBAC, SAS & Private Endpoints

Security is multi-layered: server-side encryption at rest (service-managed or customer-managed keys), encryption in transit (HTTPS/TLS), Azure AD RBAC for data-plane roles, SAS tokens for scoped temporary access, and Private Link/Private Endpoints for network isolation.

Encryption at rest

By default, Azure encrypts data at rest using Microsoft-managed keys. For additional control, use customer-managed keys (CMK) stored in Azure Key Vault or Managed HSM. Encryption scopes let you apply a separate key to a container or to individual blobs for granular control.

RBAC & Azure AD integration

- Assign built-in roles: Storage Blob Data Reader, Storage Blob Data Contributor, Storage Blob Data Owner.

- Use Azure AD identities (users, groups, managed identities) instead of account keys where possible.

SAS tokens

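Shared Access Signatures (SAS) grant limited, time-bound permissions (for example read, write or list) to specific blobs or containers. Apply least privilege and short lifetimes. Prefer a user delegation SAS, which is signed with Azure AD credentials instead of an account key. Audit SAS usage via logs.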
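A SAS is a set of query parameters: `sp` (permissions), `st`/`se` (start and expiry), `spr` (allowed protocols) and `sig` (signature). That means a quick audit can parse tokens found in configuration or logs. Below is a minimal Python sketch. The 8-hour lifetime and read/list-only permission thresholds are example policy choices, not Azure defaults. The signature itself is not verified.

```python
from datetime import datetime, timedelta, timezone
from urllib.parse import parse_qs


def _sas_time(value: str) -> datetime:
    """Parse a SAS time (UTC ISO 8601, e.g. 2024-06-01T12:00:00Z or 2024-06-01)."""
    parsed = datetime.fromisoformat(value.replace("Z", "+00:00"))
    return parsed if parsed.tzinfo else parsed.replace(tzinfo=timezone.utc)


def audit_sas(token: str, now: datetime,
              max_lifetime: timedelta = timedelta(hours=8),
              allowed_perms: str = "rl") -> list:
    """Return findings for a SAS query string; an empty list means nothing was flagged."""
    params = {key: values[0] for key, values in parse_qs(token.lstrip("?")).items()}
    if "se" not in params:
        return ["missing expiry (se)"]
    findings = []
    expiry = _sas_time(params["se"])
    start = _sas_time(params["st"]) if "st" in params else now
    if expiry <= now:
        findings.append("token has expired")
    if expiry - start > max_lifetime:
        findings.append(f"lifetime exceeds {max_lifetime}")
    extra = set(params.get("sp", "")) - set(allowed_perms)
    if extra:
        findings.append(f"permissions beyond '{allowed_perms}': {''.join(sorted(extra))}")
    if params.get("spr") != "https":
        # When spr is omitted, both HTTPS and HTTP are permitted.
        findings.append("HTTP allowed (spr is not 'https')")
    return findings
```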

Private Endpoints (Private Link)

Use Private Endpoints to map the storage account to a private IP in your VNet — traffic to the storage account flows over the Azure backbone and not the public internet.

Keypoints

- Prefer Azure AD + RBAC and private endpoints over account keys.

- Use CMK in Key Vault for auditability and key lifecycle control.

- Monitor access via diagnostic logs and alert rules in Azure Monitor.

8. Soft Delete, Versioning & Immutable Storage (WORM)

Blob soft delete and versioning help recover accidental deletes or overwrites. For regulatory compliance, immutable storage (WORM) allows you to set time-based retention and legal holds to prevent object deletion or modification during retention windows.

Keypoints

- Enable soft delete for blobs and containers — recover deleted blobs within retention period.

- Use versioning to keep previous versions and create lifecycle rules to manage versions.

- Immutable storage provides write-once-read-many policies for compliance (e.g., SEC, FINRA scenarios).
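Both recovery features follow simple time rules. A soft-deleted blob stays recoverable for the configured retention period (1 to 365 days). A time-based immutability policy blocks deletion until the retention interval has elapsed, and a legal hold blocks it until the hold is cleared. Below is a Python sketch of those checks. It assumes a container-level time-based policy whose interval is counted from the blob's creation time. The helper names are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def soft_delete_expires(deleted_on: datetime, retention_days: int) -> datetime:
    """When a soft-deleted blob is permanently removed."""
    if not 1 <= retention_days <= 365:
        raise ValueError("soft delete retention must be between 1 and 365 days")
    return deleted_on + timedelta(days=retention_days)


def can_undelete(deleted_on: datetime, retention_days: int, now: datetime) -> bool:
    """A soft-deleted blob can be restored until its retention period ends."""
    return now < soft_delete_expires(deleted_on, retention_days)


def worm_allows_delete(created_on: datetime, retention_days: int,
                       legal_hold: bool, now: datetime) -> bool:
    """Assumes a container-level time-based policy counted from blob creation.

    A legal hold blocks deletion regardless of the retention interval.
    """
    if legal_hold:
        return False
    return now >= created_on + timedelta(days=retention_days)


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    print(can_undelete(now - timedelta(days=3), retention_days=7, now=now))  # True
```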

9. Optimized for Analytics — Synapse, Databricks & Big Data

Azure Blob Storage (and ADLS Gen2) integrates directly with Azure Synapse Analytics, Azure Databricks, HDInsight and Spark ecosystems for ETL, analytics and ML workloads. Place raw data in blob containers and reference them directly from analytics runtimes for high performance.

Keypoints

- Use ADLS Gen2 for data lake patterns and better analytics performance.

- Mount storage in Databricks, or access it from Spark jobs through the ABFS driver (abfss:// URIs) or the https:// endpoints.

- Integrate with Synapse pipelines for data movement and orchestration.
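Spark and Databricks reach ADLS Gen2 through the ABFS driver, using URIs of the form `abfss://<file system>@<account>.dfs.core.windows.net/<path>`. The `abfss` scheme is the TLS variant of `abfs`. This small Python sketch builds and splits such URIs. The helper names are hypothetical. The commented PySpark line assumes an existing `spark` session with access already configured.

```python
from urllib.parse import urlsplit

DFS_SUFFIX = ".dfs.core.windows.net"


def abfss_uri(account: str, filesystem: str, path: str = "") -> str:
    """Build an ABFS URI; in ADLS Gen2 a container is called a file system."""
    return f"abfss://{filesystem}@{account}{DFS_SUFFIX}/{path.lstrip('/')}"


def parse_abfss(uri: str) -> tuple:
    """Split an abfss:// URI into (account, filesystem, path)."""
    parts = urlsplit(uri)
    host = parts.hostname or ""
    if parts.scheme != "abfss" or not host.endswith(DFS_SUFFIX) or not parts.username:
        raise ValueError(f"not an abfss URI for a dfs endpoint: {uri}")
    return host[: -len(DFS_SUFFIX)], parts.username, parts.path.lstrip("/")


# Example use from PySpark (assumes `spark` exists and access is configured):
# df = spark.read.parquet(abfss_uri("mystorageaccount", "raw", "sales/2024/"))
```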

10. Network Security — Firewall Rules & Private Access

Azure Storage accounts support firewall rules that restrict access to specific public IP ranges and to virtual network subnets (via service endpoints). They also support Private Endpoints via Azure Private Link, which keep traffic off the public internet.

Keypoints

- Enable storage firewall and allow only trusted IPs or VNets.

- Use Private Endpoints to secure traffic into your virtual network.

- Combine with service endpoints and managed identities for controlled access.
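Storage firewall IP rules are single public addresses or CIDR ranges. A request from outside them is rejected unless it arrives through an allowed virtual network or private endpoint. This Python sketch shows the matching logic as a local pre-check, using the standard `ipaddress` module. It assumes the documented restriction that RFC 1918 private ranges can't be used as IP rules. `validate_rule` and `is_allowed` are hypothetical helpers, not part of any Azure SDK.

```python
import ipaddress

# RFC 1918 private ranges; the storage firewall does not accept these as IP rules.
RFC1918 = [ipaddress.ip_network(n) for n in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")]


def validate_rule(rule: str) -> None:
    """Reject malformed rules and private ranges (use VNet rules or private endpoints instead)."""
    net = ipaddress.ip_network(rule)  # strict: raises ValueError if host bits are set
    if net.version == 4 and any(net.subnet_of(private) for private in RFC1918):
        raise ValueError(f"{rule} is a private range; use a virtual network rule instead")


def is_allowed(client_ip: str, ip_rules: list) -> bool:
    """True if client_ip falls inside any allowed single address or CIDR range."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in ipaddress.ip_network(rule) for rule in ip_rules)
```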

11. Multi-Protocol Access — REST, SDKs, CLI, PowerShell

Developers can access Blob Storage using REST APIs, language SDKs (.NET, Java, Python, Node.js, Go), Azure CLI and Azure PowerShell. Choose the appropriate tool for automation, integration and CI/CD pipelines.

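Sample CLI command: upload a file

```bash
az storage blob upload \
  --account-name mystorageaccount \
  --container-name mycontainer \
  --name myfile.txt \
  --file ./myfile.txt \
  --auth-mode login
```

Sample .NET snippet

```csharp
using System;
using Azure.Identity;        // DefaultAzureCredential (Azure.Identity package)
using Azure.Storage.Blobs;   // BlobServiceClient (Azure.Storage.Blobs package)

var client = new BlobServiceClient(new Uri("https://mystorageaccount.blob.core.windows.net"), new DefaultAzureCredential());
var container = client.GetBlobContainerClient("mycontainer");
var blob = container.GetBlobClient("myfile.txt");
await blob.UploadAsync("localfile.txt", overwrite: true); // replaces an existing blob with the same name
```

Keypoints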

- Use Azure SDKs for language-specific patterns and retries.

- Prefer managed identities for automation where possible (no embedded secrets).
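The Azure SDKs already retry transient failures with exponential backoff. If you call the REST API directly, or want to understand the SDK behavior, the pattern looks roughly like this Python sketch. The status-code set, base delay and cap are assumptions to tune. The helper names are hypothetical.

```python
import random
from typing import Optional

# Status codes commonly treated as transient. Azure Storage reports throttling as
# 503 (ServerBusy) and timeouts as 500 (OperationTimedOut); adjust the set as needed.
RETRYABLE_STATUS = {408, 429, 500, 502, 503, 504}


def backoff_delays(max_retries: int = 5, base: float = 0.5, cap: float = 30.0,
                   jitter: bool = True, rng: Optional[random.Random] = None) -> list:
    """Delay in seconds before each retry: base * 2**attempt, capped, with optional full jitter."""
    rng = rng or random.Random()
    delays = []
    for attempt in range(max_retries):
        delay = min(cap, base * 2 ** attempt)
        delays.append(rng.uniform(0, delay) if jitter else delay)
    return delays


def should_retry(status: int, attempt: int, max_retries: int = 5) -> bool:
    """Retry only transient failures, and only while attempts remain."""
    return status in RETRYABLE_STATUS and attempt < max_retries
```

Full jitter spreads retries from many clients over the whole delay window. This avoids synchronized bursts against an account that is already throttling.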

12. Integration with Backup & Recovery Solutions

Azure Blob Storage integrates with Azure Backup, the MARS agent, MABS and many third-party backup/DR vendors. Snapshot and incremental copy features help implement efficient backup strategies. An incremental snapshot copy (available for page blobs) transfers only the changes since the previously copied snapshot, saving bandwidth and time.

Keypoints

- Snapshots for quick point-in-time backups of block blobs.

- Incremental transfers via AzCopy (azcopy sync copies only new or changed files) or the SDKs.

- Combine with lifecycle and archive tiering for long-term retention.
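An incremental transfer compares what exists on each side and copies only the differences. This Python sketch shows that planning step. It compares hypothetical manifests that map blob names to size and content hash. `azcopy sync` follows the same idea (by default it compares last-modified times), and `delete_extra` mirrors its `--delete-destination` option. The data structures and helper name are illustrative.

```python
from typing import Dict, List, NamedTuple, Tuple


class Entry(NamedTuple):
    size: int
    content_md5: str  # any digest works; only equality is compared


def plan_sync(source: Dict[str, Entry], target: Dict[str, Entry],
              delete_extra: bool = False) -> Tuple[List[str], List[str]]:
    """Return (names to copy, names to delete) so that target ends up matching source."""
    to_copy = sorted(name for name, entry in source.items() if target.get(name) != entry)
    to_delete = sorted(set(target) - set(source)) if delete_extra else []
    return to_copy, to_delete
```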

13. Monitoring & Logging — Azure Monitor Metrics & Diagnostic Logs

Use Azure Monitor to collect metrics (capacity, transactions, egress) and diagnostic logs (read/write/delete) for auditing, security and cost analysis. Set alerts on transaction spikes or unauthorized access patterns.

Keypoints

- Send diagnostic logs to a Log Analytics workspace, Event Hub or secondary storage account.

- Use the Activity Log for control-plane (management) operations, and Azure Monitor resource logs (or classic Storage Analytics logging) for request-level telemetry.
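A transaction-spike alert compares the latest value of a metric with its recent baseline. This Python sketch shows one simple rule: flag values above the mean plus three standard deviations. You could apply it to, for example, hourly Transactions values exported from Azure Monitor. The window size and `k` are assumptions. Azure Monitor's dynamic-threshold alert rules offer a managed alternative.

```python
import statistics
from typing import Sequence


def is_spike(history: Sequence[float], current: float,
             k: float = 3.0, min_points: int = 12) -> bool:
    """Flag `current` if it exceeds mean + k * population stdev of `history`."""
    if len(history) < min_points:
        return False  # too little data for a stable baseline
    mean = statistics.fmean(history)
    stdev = statistics.pstdev(history)
    # Guard against a perfectly flat history, where stdev is 0.
    return current > mean + k * max(stdev, 1e-9)
```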

14. Typical Enterprise Use Cases

- Data lake for big-data analytics and ML training.

- Media streaming and CDN origin storage for web assets.

- Backups and disaster recovery target.

- Static website hosting for public single-page applications (SPAs), served from the $web container.

- IoT telemetry landing zone with event-driven processing.

15. Cost Optimization & Storage Tiers

Azure Blob Storage offers Hot, Cool and Archive tiers (a Cold tier, between Cool and Archive, is also available). Use lifecycle policies, optionally driven by last-access-time tracking, to minimize costs. Choose redundancy wisely: higher redundancy often increases cost but reduces risk.

Keypoints

- Hot: for frequent access; highest cost per GB but lowest access cost.

- Cool: lower storage cost, higher access cost — for infrequently accessed data.

- Archive: lowest storage cost, but blobs are offline and must be rehydrated before they can be read (up to 15 hours at standard priority). Use it for long-term retention.

- Use lifecycle rules to automate transitions and reduce manual errors.
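Choosing a tier means trading capacity price against access price, and a back-of-the-envelope calculation makes this concrete. This Python sketch uses made-up unit prices purely to show the arithmetic; they are not Azure prices. It also ignores two factors: rehydration time for Archive, and early-deletion charges for moving or deleting data before the minimum retention period (30 days for Cool, 180 days for Archive).

```python
from typing import Dict, Tuple

# Made-up example prices, NOT Azure list prices: (storage $/GB-month,
# read operations $/10,000, data retrieval $/GB). Replace with your region's pricing.
EXAMPLE_PRICES: Dict[str, Tuple[float, float, float]] = {
    "Hot": (0.020, 0.004, 0.00),
    "Cool": (0.010, 0.010, 0.01),
    "Archive": (0.002, 5.000, 0.02),
}


def monthly_cost(prices, tier: str, stored_gb: float, reads: int, read_gb: float) -> float:
    """Estimate one month's cost for a tier: capacity + read operations + retrieval."""
    storage, per_10k_reads, retrieval = prices[tier]
    return stored_gb * storage + reads / 10_000 * per_10k_reads + read_gb * retrieval


def cheapest_tier(prices, stored_gb: float, reads: int, read_gb: float) -> str:
    """Pick the tier with the lowest estimated monthly cost for this access pattern."""
    return min(prices, key=lambda tier: monthly_cost(prices, tier, stored_gb, reads, read_gb))
```

Consider 1,000 GB of data under these example numbers. If it is never read, Archive is cheapest. If it is read a million times a month with 500 GB retrieved, Cool is cheapest.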

16. Troubleshooting: PowerShell, CLI & KQL Examples

Below are practical scripts and runbook snippets for troubleshooting common storage issues: access errors, lifecycle policy validation, auditing SAS usage, and checking encryption scopes.

Check storage account properties (PowerShell)

```powershell
Connect-AzAccount
$rg = "myResourceGroup"
$sa = "mystorageaccount"
Get-AzStorageAccount -ResourceGroupName $rg -Name $sa |
    Select-Object -Property StorageAccountName, Location, Kind, Sku, EnableHierarchicalNamespace, Encryption
```

List lifecycle policy (PowerShell)

```powershell
Get-AzStorageAccountManagementPolicy -ResourceGroupName $rg -StorageAccountName $sa | ConvertTo-Json -Depth 10
```

Validate private endpoint connections (CLI)

```bash
az network private-endpoint list --resource-group myResourceGroup \
  --query "[].{name:name,privateLinkServiceConnections:privateLinkServiceConnections}"
```

Inspect encryption scopes & CMK usage

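```bash
# Account-level encryption settings (key source and Key Vault key details when CMK is used)
az storage account show --name mystorageaccount --resource-group myResourceGroup --query "encryption" --output json

# Encryption scopes defined on the account
az storage account encryption-scope list --account-name mystorageaccount --resource-group myResourceGroup --output table
```

Detect and list large blobs (PowerShell)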

```powershell
Install-Module -Name Az.Storage -Scope CurrentUser
Connect-AzAccount
$rg = "myResourceGroup"
$sa = "mystorageaccount"
$ctx = (Get-AzStorageAccount -ResourceGroupName $rg -Name $sa).Context

# -IncludeVersion also returns previous versions when blob versioning is enabled
$blobs = Get-AzStorageBlob -Container mycontainer -Context $ctx -IncludeVersion

# Filter for blobs larger than 100 GB and report their size in GB
$blobs | Where-Object { $_.Length -gt 100GB } |
    Select-Object Name, @{Name = 'SizeGB'; Expression = { [math]::Round($_.Length / 1GB, 2) }}
```

Audit SAS tokens (example)

When auditing, search the resource logs for SAS-authenticated and anonymous requests. Look for unusual patterns such as read spikes or unexpected client IP addresses. Send the storage diagnostic logs to a Log Analytics workspace and query them with KQL:

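```kusto
// Azure Monitor resource logs for Blob Storage land in the StorageBlobLogs table
StorageBlobLogs
| where TimeGenerated > ago(7d)
| where Category in ("StorageRead", "StorageWrite")
| where AuthenticationType in ("SAS", "Anonymous")
| summarize Requests = count() by AuthenticationType, CallerIpAddress, OperationName
| order by Requests desc
```

Troubleshooting checklist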

- Check whether callers authenticate with account keys or Azure AD RBAC (avoid account keys in automation).

- Check firewall & private endpoint rules for blocked source IPs.

- Confirm encryption scope and CMK permissions in Key Vault if you see encryption errors.

- Watch Azure Storage metrics for unusual increases in egress or transactions, which can indicate data exfiltration.

FAQs — Common Questions about Azure Blob Storage

Q: How does ADLS Gen2 differ from standard Blob Storage?

A: ADLS Gen2 enables a hierarchical namespace and POSIX-like ACLs on top of Blob Storage, improving performance for big-data workloads.

Q: Which access tier should I use?

A: Hot for frequently read/written data, Cool for infrequently accessed data, Archive for rarely accessed long-term retention. Use lifecycle policies to automate transitions.

Q: Can I control the encryption keys myself?

A: Yes. Use customer-managed keys (CMK) stored in Azure Key Vault or Managed HSM to control encryption keys.

Q: Which redundancy option protects against outages?

A: Zone-redundant storage (ZRS) keeps data available if a zone in the region fails. GRS and RA-GRS replicate data to a secondary region and support account failover after a region-wide disaster.

More FAQs & Troubleshooting

See the Azure Blob Storage docs for complete guidance, limits, and step-by-step examples.

References & Further Reading

- Azure Blob Storage documentation (Microsoft).

- Blob lifecycle management overview.

- ADLS Gen2 hierarchical namespace.

- Customer-managed keys for Azure Storage.

- Encryption scopes for Blob Storage.

