Storage Area Network SAN — High-Performance Block-Level Storage for Databases & VMs

This enterprise guide explains Storage Area Network SAN architecture, protocols (Fibre Channel, iSCSI, NVMe-oF), deployment models, performance tuning, redundancy, security, monitoring, hybrid & cloud integration, and troubleshooting scripts (PowerShell, Linux, NVMe & REST samples).


Introduction — What is a Storage Area Network SAN?

A Storage Area Network (SAN) is a dedicated, high-speed network that provides block-level storage to servers and hosts. Unlike file-based network storage (NAS), a SAN exposes raw block devices (LUNs), which operating systems and hypervisors treat as local disks. SANs are designed for mission-critical workloads that demand predictable I/O, low latency, and high availability, such as high-transaction databases, virtual machine clusters, and enterprise applications like SAP, Oracle, or SQL Server.

- SAN = block-level storage over a dedicated fabric (FC, iSCSI, NVMe-oF).

- Optimized for low latency, high IOPS, and throughput for databases & VMs.

- Commonly uses storage arrays (all-flash, hybrid), dedicated SAN switches, HBAs, and storage controllers.

Intro FAQs

- Q: Is SAN the same as NAS?

- A: No. SAN provides block-level storage (LUNs) while NAS provides file-level storage (NFS/SMB). SAN is preferred for databases & VMs requiring low latency.

- Q: Can SAN be used in cloud?

- A: Yes. Cloud block services (Azure Ultra Disk, AWS io2 Block Express, Google Persistent Disk Extreme) mimic SAN-like characteristics. Hybrid setups combine on-prem arrays with cloud for DR/tiering.

Key SAN Components

The foundational components of any Storage Area Network SAN include:

- Storage Arrays: All-flash arrays, hybrid arrays (SSD + HDD), or NVMe arrays providing logical volumes and enterprise features (RAID, caching, dedupe).

- HBAs (Host Bus Adapters): Fibre Channel HBAs or converged NICs provide host connectivity to the SAN fabric.

- SAN Switches: Fibre Channel switches create the fabric and zoning, while Ethernet switches carry iSCSI/FCoE traffic.

- Storage Controllers: Handle I/O, caching, replication, snapshots, and data services.

- Disk Drives: NVMe, SSD, SAS, or HDD depending on performance and capacity needs.

| Component | Role | Notes |
|---|---|---|
| Storage Array | Stores block LUNs | All-flash for low latency; hybrid for cost balancing |
| HBA | Host connectivity | Hardware offload & queue depth tuning |
| SAN Switch | Fabric management | Zoning, fabric services, F_Port/E_Port |
| Storage Controller | I/O & caching | Active-Active or Active-Passive |

- Choose HBAs & drivers certified by array vendor for best performance.

- Modern arrays expose advanced services via REST APIs for automation.

- Fabric planning (cabling, zoning, QoS) is as important as array sizing.

Components FAQs

- Q: Do I need dedicated switches?

- A: For production SAN, yes — separate fabric reduces congestion and secures SAN traffic.

- Q: Can SAN use standard Ethernet?

- A: Yes — iSCSI runs over Ethernet; FCoE runs Fibre Channel protocols over Data Center Bridging (DCB) capable Ethernet.

SAN Protocol Types: Fibre Channel, iSCSI, FCoE, NVMe-oF

Understanding protocols is critical to design. Each protocol trades off complexity, latency, cost, and manageability.

Fibre Channel (FC)

Storage Area Network SAN deployments frequently rely on Fibre Channel (FC) for its proven low latency and deterministic behavior. FC fabrics provide rich management, zoning, and excellent performance for high IOPS workloads.

iSCSI

iSCSI carries SCSI commands over TCP/IP. It's cost-effective and leverages existing Ethernet infrastructure. With modern 25/40/100GbE and DCB, iSCSI performs well for many enterprise workloads.

FCoE (Fibre Channel over Ethernet)

FCoE transports Fibre Channel frames over Ethernet (requires DCB). It consolidates networking but requires careful network configuration.

NVMe over Fabrics (NVMe-oF)

NVMe-oF is the emerging choice for ultra-low-latency block storage. By exposing NVMe namespaces across a fabric (RDMA transports such as RoCE or InfiniBand, Fibre Channel via FC-NVMe, or TCP), it achieves near-local NVMe device latency at scale.

- Pick FC for strict deterministic latency requirements, long established in enterprise SANs.

- Choose iSCSI for cost-sensitive environments or fast deployment over IP networks.

- Adopt NVMe-oF for next-gen all-NVMe arrays & ultra-low latency workloads.

Protocol FAQs

- Q: Is iSCSI slower than FC?

- A: Historically yes, but with 25GbE and faster Ethernet, NIC offloads, and RDMA via iSER (iSCSI Extensions for RDMA), iSCSI can approach FC performance for many workloads.

- Q: When should I move to NVMe-oF?

- A: When application latency budgets require sub-millisecond performance and you have NVMe arrays capable of very high IOPS.

SAN Architecture Models

SAN architecture defines redundancy, scale, and manageability. Choose based on RPO/RTO, throughput, and budget.

Single Fabric SAN

Simple design for smaller deployments. Single fabric reduces hardware cost but opens a single point of fabric failure.

Dual Fabric SAN

Enterprise best practice: two separate fabrics (A & B) for redundancy. Hosts connect to both fabrics via multiple HBAs and MPIO to ensure continuous access even when a fabric fails.

Core-Edge Architecture

Scales better for large deployments: core switches concentrate fabric traffic while edge switches connect racks/arrays.

Mesh Design

Used in hyperscale or very high-throughput environments: switches interconnect directly with one another, which keeps hop counts low and aggregates bandwidth across many inter-switch links.

- Always design for N+1 (controllers, power, paths).

- Document zoning and LUN masking thoroughly.

- Use MPIO with per-path round robin or vendor-recommended policy.

Architecture FAQs

- Q: Do dual fabrics double costs?

- A: They increase capital costs but dramatically improve availability — often required for SLAs.
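
The availability gain from a second fabric can be estimated with basic probability. The sketch below assumes the two fabrics fail independently and uses a hypothetical 99.9% availability per fabric. Real fabrics share some failure modes (power, operator error), so treat the result as an optimistic bound rather than a prediction.

```python
def combined_availability(per_fabric: float, fabrics: int = 2) -> float:
    """Probability that at least one of `fabrics` independent fabrics is up."""
    return 1.0 - (1.0 - per_fabric) ** fabrics


def downtime_minutes_per_year(availability: float) -> float:
    """Expected unavailable minutes in a 365-day year."""
    return (1.0 - availability) * 365 * 24 * 60


single = 0.999  # hypothetical per-fabric availability, not a vendor figure
dual = combined_availability(single)
print(f"single fabric: {downtime_minutes_per_year(single):.1f} min/year")
print(f"dual fabric:   {downtime_minutes_per_year(dual):.2f} min/year")
```

Comparing the two numbers against the SLA penalty for an outage is one way to frame the extra capital cost of fabric B.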

SAN vs NAS — When to choose a Storage Area Network SAN

| Feature | Storage Area Network SAN | NAS |
|---|---|---|
| Storage Type | Block-level (LUNs) | File-level (NFS/SMB) |
| Typical Use Case | Databases, VMs, high-IOPS apps | File shares, home directories, content stores |
| Latency | Very Low | Higher |
| Performance | Higher | Moderate |
| Protocols | FC, iSCSI, NVMe-oF | NFS, SMB |

- Choose SAN when low latency & consistent IOPS are required (databases/VMs).

- Choose NAS for file collaboration, user data, and general content where latency is less critical.

SAN vs NAS FAQs

- Q: Can NAS be used for VMs?

- A: Some hypervisor features support NAS datastores (e.g., NFS datastores in VMware), but SAN often offers better performance and enterprise features.

High-Performance Features & Optimization Techniques for Storage Area Network SAN

Modern SAN arrays include many features to boost performance and lower effective cost-per-IOPS.

Multipathing & Load Balancing

Use MPIO (Microsoft MPIO, Linux DM-MPIO multipathd, or vendor multipathing) to distribute I/O across paths and avoid hot-path bottlenecks.
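
To make the idea of distributing I/O across paths concrete, here is a minimal round-robin path selector that skips failed paths. It is an illustration of the policy only, not how Microsoft MPIO or DM-MPIO is implemented; the class name and path labels are hypothetical.

```python
from itertools import cycle


class RoundRobinSelector:
    """Toy round-robin path selector that skips paths marked as failed."""

    def __init__(self, paths):
        self.paths = list(paths)
        self.failed = set()
        self._ring = cycle(self.paths)

    def mark_failed(self, path):
        self.failed.add(path)

    def mark_restored(self, path):
        self.failed.discard(path)

    def next_path(self):
        # Try each path at most once per call so a full outage is detected.
        for _ in range(len(self.paths)):
            candidate = next(self._ring)
            if candidate not in self.failed:
                return candidate
        raise RuntimeError("all paths are down")


# Hypothetical labels: two HBA ports, one per fabric, two array ports each.
selector = RoundRobinSelector(["fabA-p1", "fabA-p2", "fabB-p1", "fabB-p2"])
selector.mark_failed("fabA-p2")
print([selector.next_path() for _ in range(6)])
```

Production multipath drivers also weigh queue length, path latency, and ALUA path states, which is why vendor-recommended policies usually beat plain round robin.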

Caching

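Write-back and write-through cache policies strongly affect latency and durability: write-back acknowledges a write once it reaches controller cache, while write-through waits until it reaches stable media. Confirm that the controller's write cache is battery-backed or flash-backed so that write-back performance does not come at the cost of data safety.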

Automated Tiering

Move hot blocks to SSD/NVMe automatically and cold blocks to high-capacity HDDs. Tiering can reduce cost while retaining performance for hot workloads.

Thin Provisioning

Improve capacity utilization by provisioning more logical capacity than is physically installed and adding physical capacity only as data grows. Monitor thin pools closely to avoid unexpected capacity exhaustion.
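
Thin pool monitoring comes down to two numbers: how full the physical pool is and how far it is oversubscribed. A minimal sketch follows; the function name, the 80% alert threshold, and the sample figures are assumptions for illustration, not vendor defaults.

```python
def thin_pool_status(physical_tb, used_tb, provisioned_tb, alert_pct=80.0):
    """Summarize a thin pool: fill level, oversubscription ratio, alert flag."""
    used_pct = 100.0 * used_tb / physical_tb
    oversubscription = provisioned_tb / physical_tb
    return {
        "used_pct": used_pct,
        "oversubscription": oversubscription,
        "alert": used_pct >= alert_pct,
    }


# Hypothetical pool: 100 TB physical, 85 TB written, 250 TB promised to hosts.
print(thin_pool_status(physical_tb=100, used_tb=85, provisioned_tb=250))
```

The higher the oversubscription ratio, the lower the alert threshold should be, because a burst of host writes can consume the remaining physical space faster.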

Compression & Deduplication

Reduce capacity use for suitable workloads. Ensure CPU/storage controller overhead is accounted for — inline dedupe/compression on hot transactional data may not always be beneficial.

Clone & Snapshot Acceleration

Snapshot efficiency matters for DB quick clones and backups. Some arrays offer efficient redirect-on-write snapshots to minimize impact.

- Right-size RAID — prefer RAID 10 for database/VMs; use RAID 6 for bulk/capacity tiers.

- Tune HBA queue depths according to vendor guidance.

- Enable jumbo frames & dedicated VLANs for iSCSI where appropriate.

- Keep firmware/drivers consistent and certified.
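
The RAID guidance above follows from the commonly cited write penalty: each small random host write costs roughly 2 backend I/Os on RAID 10, 4 on RAID 5, and 6 on RAID 6. The sketch below applies that rule of thumb; it ignores controller cache and full-stripe writes, both of which reduce the penalty on real arrays, and the workload figures are hypothetical.

```python
# Rule-of-thumb backend I/Os per small random host write.
WRITE_PENALTY = {"RAID10": 2, "RAID5": 4, "RAID6": 6}


def backend_iops(frontend_iops, write_fraction, raid_level):
    """Estimate backend disk IOPS needed to serve a given host workload."""
    reads = frontend_iops * (1.0 - write_fraction)
    writes = frontend_iops * write_fraction
    return reads + writes * WRITE_PENALTY[raid_level]


# Hypothetical OLTP workload: 10,000 host IOPS, 30% writes.
for level in ("RAID10", "RAID5", "RAID6"):
    print(level, round(backend_iops(10_000, 0.30, level)))
```

For write-heavy databases the gap between RAID 10 and RAID 6 grows quickly, which is why RAID 6 is usually reserved for capacity tiers.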

Performance FAQs

- Q: Should I enable dedupe on database LUNs?

- A: Usually not — dedupe can increase CPU overhead and might not be effective for encrypted or random database blocks. Use dedupe for file shares and backup targets.

Storage Area Network SAN for Databases

Databases require predictable latency, high random IOPS, and fast transaction log performance. SANs deliver this via tiered flash, caching, and QoS.

Best practices for databases

- Place transaction logs on a separate, low-latency tier (all-flash or NVMe).

- Use RAID 10 for DB data and logs where write latency matters.

- Use LUN alignment and file system block sizing tuned to the DB engine.

- Leverage snapshots for consistent backups (coordinate with flush/backup APIs for DB consistency).

- IOPS profile: measure read vs write mix and queue depth.

- Latency targets: define SLOs (e.g., <1ms 95th percentile for logs).

- Test failover & snapshot restore times — quantify RTO/RPO.
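
Queue depth, latency, and IOPS in the checklist above are linked by Little's Law: sustained IOPS is approximately the number of outstanding I/Os divided by the average latency. The following sketch uses that relationship; the function names and sample values are illustrative assumptions, and real hosts are also capped by HBA, fabric, and array limits.

```python
import math


def max_iops(queue_depth, latency_ms):
    """Upper bound on IOPS for a given number of outstanding I/Os."""
    return queue_depth * 1000 / latency_ms


def required_queue_depth(target_iops, latency_ms):
    """Outstanding I/Os needed to sustain target_iops at latency_ms."""
    return math.ceil(target_iops * latency_ms / 1000)


# Hypothetical figures: queue depth 32 at 0.5 ms average latency.
print(max_iops(32, 0.5))
print(required_queue_depth(100_000, 0.5))
```

If a benchmark shows far fewer IOPS than this bound, the bottleneck is usually latency rising under load rather than the queue depth setting itself.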

Databases FAQs

- Q: How should I allocate LUNs for SQL Server?

- A: Typically separate OS, data, logs, tempdb, backups onto separate LUNs/tiers. Use proper alignment and monitor I/O patterns to avoid contention.

Storage Area Network SAN for Virtualization (VMware, Hyper-V)

SANs are the backbone for enterprise virtualization, enabling shared storage for vMotion, live migration, HA clustering, and consolidated backups.

VMware & SAN

- VMFS datastores on SAN LUNs provide shared block storage for VMs.

- Use array-based snapshots and clones for fast VM cloning, and integrate backups through VADP (vSphere Storage APIs for Data Protection).

- Monitor storage vMotion and DRS impacts on the SAN fabric.

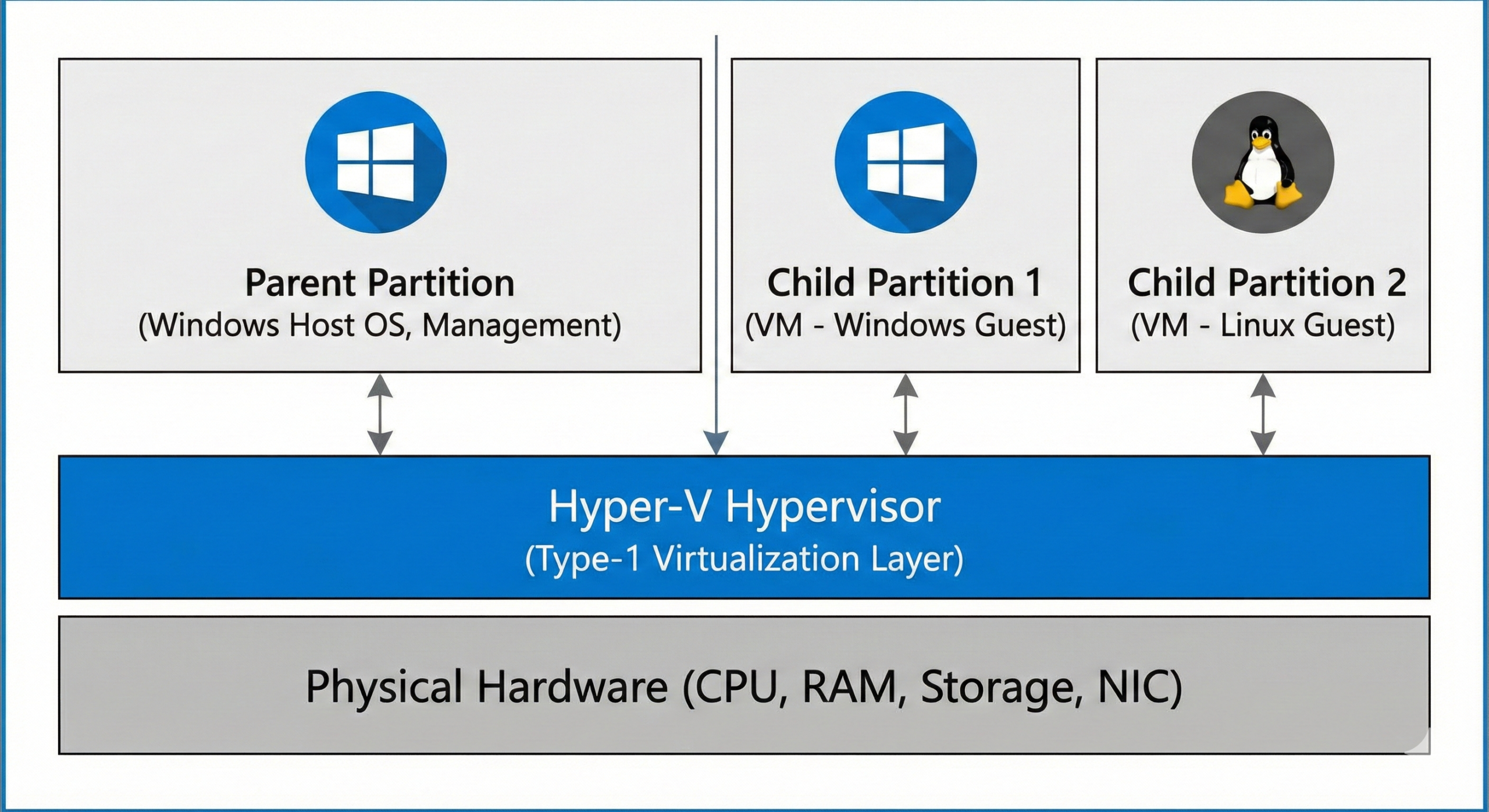

Hyper-V & SAN

- Use CSV (Cluster Shared Volumes) on SAN LUNs for HA clusters.

- Prefer SMB3 for file-based integration in some Microsoft environments, but SAN LUNs still dominate for enterprise DBs/VMs.

- Reserve enough IOPS for peak VM activity (backup windows, provisioning storms).

- Use storage QoS where available to limit noisy neighbor effects.

Virtualization FAQs

- Q: Should I use NFS or SAN for VMware?

- A: Both are supported — NFS can be simpler. For demanding workloads, block SAN (iSCSI/FC) often gives better performance and advanced array features.

Redundancy & High Availability in Storage Area Network SAN

High availability in SAN design is a combination of hardware redundancy, fabric separation, and replication strategies.

Dual controllers & RAID

Modern arrays use dual controllers (active/active or active/passive) and enterprise RAID (RAID 1/10/5/6) with battery or flash-backed write cache for durability.

MPIO & Path Redundancy

Multiple physical paths via HBAs and MPIO ensure that a single path or switch failure does not break access to LUNs.

Replication & DR

Array-based synchronous replication supports zero-RPO for nearby DR sites; asynchronous replication supports distance & cost tradeoffs. Consider replication orchestration for consistent multi-LUN failover.

- Dual fabric + MPIO is standard for enterprise availability.

- Test firmware update procedures — rolling upgrades reduce downtime.

- Automate failover runbooks for DR tests and switchover.

Redundancy FAQs

- Q: Do I need synchronous replication for DR?

- A: Only if near-zero RPO is required. Asynchronous is common for longer distances to avoid latency penalties.
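
The latency penalty of synchronous replication can be estimated from distance alone. Light travels through optical fiber at roughly 200,000 km/s, or about 5 microseconds per kilometer one way. The sketch below uses that approximation and ignores switch, DWDM, and array processing delays; the `round_trips` parameter is an assumption, since some protocols need more than one round trip per write.

```python
FIBER_US_PER_KM = 5.0  # approximate one-way propagation delay in fiber


def sync_write_penalty_ms(distance_km, round_trips=1):
    """Added latency per write from fiber propagation delay alone."""
    round_trip_us = 2 * distance_km * FIBER_US_PER_KM
    return round_trips * round_trip_us / 1000


for km in (10, 100, 500):
    print(f"{km} km: +{sync_write_penalty_ms(km):.1f} ms per write")
```

At around 100 km the propagation delay alone adds about a millisecond to every write, which is why synchronous replication is normally limited to metro distances.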

SAN Security Best Practices

Securing a Storage Area Network SAN is essential to prevent unauthorized access and data compromise.

Zoning & LUN Masking

Use FC zoning and LUN masking to restrict which hosts see which LUNs. Soft zoning is common, but combine with strict LUN masking for defense in depth.
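
Zoning and LUN masking act as two independent gates: the fabric must let the host port reach the array port, and the array must present the LUN to that host. A minimal model of that check follows. The data layout, zone name, WWPNs, and LUN name are all hypothetical and do not correspond to any switch or array API.

```python
def host_can_access(host_wwpn, target_wwpn, lun, zones, lun_masks):
    """True only if the host is zoned to the target AND masked to the LUN."""
    zoned = any(
        host_wwpn in members and target_wwpn in members
        for members in zones.values()
    )
    masked_in = host_wwpn in lun_masks.get(lun, set())
    return zoned and masked_in


# Hypothetical single-initiator zone and masking view.
HOST = "10:00:00:00:c9:aa:aa:01"
ARRAY_PORT = "50:06:01:60:bb:bb:00:01"
zones = {"z_db01_arrayA": {HOST, ARRAY_PORT}}
lun_masks = {"LUN_12": {HOST}}

print(host_can_access(HOST, ARRAY_PORT, "LUN_12", zones, lun_masks))
print(host_can_access("10:00:00:00:c9:aa:aa:02", ARRAY_PORT, "LUN_12", zones, lun_masks))
```

Running a check like this against exported zoning and masking configuration is one way to audit that documentation still matches what is deployed.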

Encryption

Encrypt data at rest (array-based) and in transit (where supported). Many arrays offer hardware encryption with minimal performance impact.

Firmware & Patch Management

Regular updates reduce vulnerabilities. Test updates in a non-prod environment before wide rollout.

Access Controls & Auditing

Use role-based access control for array management consoles and log changes (zoning changes, LUN creation, replication actions).

- Isolate SAN management networks from data path networks.

- Implement AAA (RADIUS/AD/LDAP) for management console access.

- Rotate keys & certificates on a schedule; monitor for unauthorized changes.

Security FAQs

- Q: Is encrypting SAN LUNs expensive for performance?

- A: Modern hardware encryption typically incurs negligible performance costs on enterprise arrays.

SAN Monitoring & Observability

Effective monitoring is crucial to detect performance degradation, capacity issues, and hardware faults before they hit SLAs.

Metrics to Monitor

- IOPS, throughput (MB/s), latency (avg/95/99th)

- Cache hit ratios and cache latency

- RAID rebuild status and disk health

- Switch port errors, link utilization, fabric health

- Thin provisioning usage and pool thresholds
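
Percentile latency is worth computing explicitly because averages hide tail behavior. The sketch below uses the nearest-rank method on a list of latency samples in milliseconds; the sample data and the 1 ms limit are hypothetical, and monitoring tools may use a different interpolation method.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def meets_slo(samples, pct, limit_ms):
    """True if the given percentile is strictly below the latency limit."""
    return percentile(samples, pct) < limit_ms


# Hypothetical latency samples (ms): mostly fast, with a slow tail.
latencies = [0.4] * 94 + [0.9] * 4 + [2.5] * 2
print("avg:", round(sum(latencies) / len(latencies), 3))
print("p95:", percentile(latencies, 95), "p99:", percentile(latencies, 99))
print("p95 under 1 ms:", meets_slo(latencies, 95, 1.0))
```

In this sample the average and p95 look healthy while p99 does not, which is the pattern that tends to surface as intermittent application timeouts.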

Tools & Integrations

Vendor tools (Dell EMC Unisphere, NetApp ONTAP System Manager, HPE 3PAR StoreServ Management Console) plus third-party monitoring (SolarWinds Storage Resource Monitor, Prometheus with exporters) provide dashboards and alerts. Integrate with your ticketing & incident response (PagerDuty, ServiceNow).

- Set latency & rebuild alerts at thresholds based on SLAs.

- Correlate host-side metrics (OS/DB) with array metrics for root cause analysis.

- Maintain historical baselines for capacity forecasting.
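
Historical baselines can feed a simple capacity forecast. The sketch below fits a straight line to daily usage samples with ordinary least squares and estimates the days remaining until the pool is full. It assumes roughly linear growth, which will not hold for seasonal or bursty workloads; the function name and data are illustrative.

```python
def days_until_full(days, used_tb, capacity_tb):
    """Linear-trend estimate of days left after the last sample, or None."""
    n = len(days)
    mean_x = sum(days) / n
    mean_y = sum(used_tb) / n
    sxx = sum((x - mean_x) ** 2 for x in days)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(days, used_tb))
    if sxx == 0:
        return None  # need samples from at least two distinct days
    slope = sxy / sxx  # TB per day
    if slope <= 0:
        return None  # usage is flat or shrinking
    intercept = mean_y - slope * mean_x
    full_day = (capacity_tb - intercept) / slope
    return max(0.0, full_day - days[-1])


# Hypothetical samples: day index and TB used on a 100 TB pool.
print(days_until_full([0, 1, 2, 3, 4], [50, 52, 54, 56, 58], 100))
```

Alerting when the forecast drops below the procurement lead time is often more useful than a fixed percentage threshold.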

Monitoring FAQs

- Q: How often should I collect SAN metrics?

- A: For high-priority workloads, collect metrics at 10-30 second intervals. For capacity and long-term trends, 5-15 minute intervals are fine.

Storage Area Network SAN in Hybrid & Multi-Cloud Architectures

Cloud providers expose block storage options that can be integrated into SAN strategies for DR, tiering, and burst capacity.

Cloud Block Services that emulate SAN:

- Azure Ultra Disk & Managed Disks

- AWS io2 Block Express & EBS

- Google Cloud Persistent Disk Extreme

Integration Patterns

- Cloud DR: replicate on-prem SAN volumes to cloud block storage or use array vendor cloud replication gateways.

- Cloud tiering: move cold data to blob/object storage (S3/Azure Blob) from on-prem SAN.

- Backup to cloud: use array snapshot export / backup gateways to move backups to cloud object storage.

- Consider bandwidth & latency for replication; use WAN optimization if needed.

- Test DR failover to cloud regularly; ensure orchestration works for multi-LUN failover.
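
Replication bandwidth can be sized from the daily change rate and the window in which changes must be shipped. This is a rough sketch that uses decimal units (1 GB = 8,000 megabits) and ignores compression, deduplication, and protocol overhead; the function name and figures are hypothetical.

```python
def required_mbps(changed_gb, window_hours):
    """Average link speed (Mbit/s) needed to ship changed_gb in the window."""
    megabits = changed_gb * 8 * 1000
    seconds = window_hours * 3600
    return megabits / seconds


# Hypothetical: 450 GB of changed blocks replicated within a 10-hour window.
print(f"{required_mbps(450, 10):.0f} Mbit/s sustained")
```

Size the link above this average, since change rates are rarely uniform and a replication backlog directly increases the achieved RPO.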

Hybrid FAQs

- Q: Can I run my VMs directly on cloud block storage for failover?

- A: Yes — with proper orchestration and compatibility, VMs can be rebuilt in cloud using replicated disks or image-based provisioning.

Common SAN Use Cases

- High-performance relational databases (Oracle, MSSQL, PostgreSQL)

- Virtual machine datastores and vSphere backends

- ERP/CRM/SAP business-critical applications

- Backup & replication repositories

- High-speed scratch storage for analytics and HPC

SAP workloads often require low latency for database transactions and high availability. SAN with dual fabrics, snapshot-based backups, and replication for DR is a common architecture.

Use Cases FAQs

- Q: Is SAN overkill for small deployments?

- A: For small environments with minimal performance needs, SAN may be excessive. Consider converged HCI or cloud block storage for cost savings and simplicity.

Challenges & Cost Considerations

SANs bring predictable performance at a higher cost and operational complexity.

- Cost: Higher CAPEX (arrays, switches, HBAs) and specialized skills required.

- Complexity: Zoning, LUN masking, replication orchestration, cross-site topologies.

- Vendor Lock-in: Proprietary features may make migrations complex.

- Use tiering to keep hot data on premium storage and cold data on cheaper tiers.

- Use dedupe/compression for backup targets to reduce cloud egress & storage costs.

Challenges FAQs

- Q: Can software-defined storage replace SAN?

- A: SDS can replace traditional SAN in many cases — especially in hyperconverged infrastructures — but hardware SANs still lead in raw performance for some workloads.

The Future of Storage Area Network SAN

Expect wider NVMe-oF adoption, faster Ethernet (100Gb+), AI-driven performance tuning, and hybrid cloud integration.

- NVMe-oF for ultra-low latency designs

- AI/ML models to predict hot blocks & auto-tune tiering

- Better cloud-array integrations and policy-driven replication

- Software-defined converged designs that blur SAN/NAS distinctions

Future FAQs

- Q: Will NVMe replace FC?

- A: They are not direct competitors and will coexist. NVMe is a storage protocol, while FC is a transport that can carry it (FC-NVMe). FC continues to evolve, and many enterprises will adopt NVMe-oF where ultra-low latency is required.

Troubleshooting & Automation — PowerShell, Linux, NVMe & REST Scripts

Below are practical commands and scripts you can use to troubleshoot SAN issues, collect metrics, and automate common tasks. Use vendor APIs for advanced automation (Unisphere, ONTAP, HPE REST, etc.).

Windows PowerShell: SAN & iSCSI Checks

PowerShell commands for host-side checks on Windows hosts and Hyper-V/SQL servers.

# List disks visible to the host
Get-Disk | Sort-Object Number

# Get storage pool info (Windows Server Storage Spaces)
Get-StoragePool | Format-List *

# List iSCSI sessions
Get-IscsiSession | Format-Table -AutoSize

# Show MPIO disks and their path states (requires the Multipath I/O feature)
mpclaim.exe -s -d

# Show MPIO timer and path-verification settings (MPIO module)
Get-MPIOSetting

# Query the array through a storage vendor module (example: Dell EMC PowerStore PowerShell).
# Cmdlet names vary by module and version; confirm them with Get-Command -Module <vendor module>.
Connect-PowerStore -Hostname 'powerstore-mgmt' -Username admin
Get-PowerStoreVolume

Notes: Ensure you run PowerShell with Administrator privileges. Vendor-specific modules (NetApp, Dell EMC, HPE) provide deeper inventory & replica controls.

Linux: NVMe, iSCSI & Multipath

# List block devices
lsblk -o NAME,SIZE,ROTA,TYPE,MOUNTPOINT

# Show iSCSI session state
sudo iscsiadm -m session -P 3

# Show multipath devices
sudo multipath -ll

# NVMe namespace list (nvme-cli)
sudo nvme list

# Check NVMe SMART
sudo nvme smart-log /dev/nvme0n1

NVMe-oF (Linux, RoCE) Diagnostic Hints

- Validate RDMA connectivity (ibv_devinfo, rdma link show).

- Monitor NVMe controller connect/disconnect via dmesg.

Array REST API Example (Pseudo-REST — adapt to vendor endpoints)

POST https://<array-mgmt>/api/v1/sessions
Headers:
Content-Type: application/json
Body:
{
  "username": "admin",
  "password": "REDACTED"
}

# Example: list volumes
GET https://<array-mgmt>/api/v1/volumes
Authorization: Bearer <token>

Replace <array-mgmt> with your array management endpoint. Most enterprise arrays (Dell EMC, NetApp, HPE) expose REST endpoints and SDKs in Python/PowerShell for automation.
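
The pseudo-REST calls above translate directly into Python's standard library. This sketch only builds the two requests and does not send them. The `/api/v1/sessions` and `/api/v1/volumes` paths are the placeholder endpoints from this article, not a real vendor API, and the host name and token are hypothetical.

```python
import json
import urllib.request

ARRAY_MGMT = "array-mgmt.example.com"  # hypothetical management endpoint


def build_login_request(host, username, password):
    """POST credentials to the (pseudo) session endpoint."""
    body = json.dumps({"username": username, "password": password}).encode("utf-8")
    return urllib.request.Request(
        f"https://{host}/api/v1/sessions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def build_list_volumes_request(host, token):
    """GET the (pseudo) volume list using a bearer token."""
    return urllib.request.Request(
        f"https://{host}/api/v1/volumes",
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )


login = build_login_request(ARRAY_MGMT, "admin", "REDACTED")
print(login.get_method(), login.full_url)
```

Passing either request to `urllib.request.urlopen` would send it. In practice, read credentials from a secrets store rather than source code, and keep TLS certificate verification enabled for the management endpoint.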

Azure / Cloud Examples (ARM CLI & az)

# List managed disks in a subscription (az CLI)
# The size property is diskSizeGb in az JSON output; confirm with: az disk list -o json
az disk list --query "[].{name:name, size:diskSizeGb, sku:sku.name}" -o table

# Create a managed disk (example)
az disk create -g MyResourceGroup -n MyDisk --size-gb 512 --sku Premium_LRS

Note on Microsoft Graph: the Graph API targets identity and Microsoft 365 resources, not Azure disks. Use the Azure Resource Manager REST API or the az CLI for storage disk operations, as shown above.

- Collect host and array metrics at the same time to correlate spikes.

- Check HBA driver/firmware mismatches first when you see path failures.

- Use vendor diagnostics & support when disks or RAID rebuilds fail.

Troubleshooting FAQs

- Q: How do I find “noisy” VMs hogging IOPS?

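- A: Use per-VM storage metrics at the hypervisor (for example esxtop on vSphere or Get-StorageQosFlow on Hyper-V) or per-volume statistics on the array to identify high I/O consumers, then throttle them with storage QoS or isolate them.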

General FAQ — Storage Area Network SAN

- Q: How does an on-premises SAN differ from SAN-like block storage in the cloud?

- A: Cloud block storage provides many SAN-like properties. However, an on-prem SAN provides dedicated hardware control, while cloud block storage uses hypervisor abstraction and may have different performance characteristics.

- Q: What RAID is best for databases on SAN?

- A: RAID 10 is typically recommended for DBs and logs due to write performance and rebuild characteristics. RAID 6 is useful for high-capacity, lower-IOPS tiers.

- Q: Is NVMe-oF supported by commercial arrays?

- A: Yes. Many current arrays support NVMe-oF over Fibre Channel (FC-NVMe) or RDMA-based fabrics (RoCE/InfiniBand).

Authoritative References & Further Reading

Official vendor docs and resources for deeper exploration:

- Microsoft Docs — Azure storage & SAN-like offerings.

- VMware — vSphere storage architecture & VMFS best practices.

- Dell Technologies — PowerStore/Unity/PowerMax documentation.

- NetApp — ONTAP & SAN integration guides.

- HPE — 3PAR/Primera resources.

- CloudKnowledge (internal) — Internal articles and related SAN content.

Note: For vendor-specific REST API examples, consult the array vendor SDK and official management REST API documentation.

Conclusion & Action Checklist

Storage Area Network SAN remains a foundational technology for high-performance, low-latency enterprise storage. Whether your organization is modernizing on-prem arrays, moving to NVMe-oF, or integrating with cloud block storage, the fundamentals remain the same: plan fabrics, design for redundancy, tune for workloads, and automate with vendor APIs.

Immediate Action Checklist

- Define SLA latency & IOPS targets for critical workloads.

- Audit current SAN fabric: HBAs, switch firmware, zoning, and paths.

- Implement monitoring and alerts across both host & array metrics.

- Create test plans for failover & DR using array snapshots & replication.

- Evaluate NVMe-oF proof of concept for ultra-low latency needs.

Use the PowerShell, Linux, and REST snippets above to build repeatable automation and runbooks. Document all zoning and LUN assignments as part of your configuration management.
